Recruiting industry analyst Rob McIntosh believes “AI recruiting” is the future of recruiting. Ah, another future-of-recruiting article! Another tome off the latest assembly line of predictions that the profession will be elevated to one where Supreme Bot Beings sit atop the Totem of Talent. Alas, being a human recruiter is no longer considered sexy enough for the Futurists.

Actually, Rob isn’t about automated recruiting; he’s about efficient recruiting. We’ll come back to this notion in a few paragraphs.

It is very easy to be snookered by the sweet smell of technology, unicorn valuations, 30 under 30 lists, and anything that comes to the masses via Google. But allow me to offer another perspective on this future-of-recruiting discussion.

Artificial Stupidity

If there’s artificial intelligence, then by logic there has to be artificial stupidity: taking the Word of the Bot as Gospel. People, this is a very slippery slope that threatens to push aside human expertise, experience, and compassion. Sure, the system can learn, and the AlphaGo architecture is a unique extension of previous AI architectures (more about this soon). But humans have a funny way of turning logic on its side and playing a hunch that carries a deeper, more robust meaning than any Monte Carlo tree search fueled by “…a huge amount of compute power” can produce. Again, more about this later.

Just to be clear, I’m not against #BotRecruiting for the repeatable, scalable types of roles that take up so much of a Sourcer’s or Recruiter’s time, with or without pipelines and talent communities. Quite the opposite: I’m very bullish on #AIrecruiting for the roughly 68% of all hiring that isn’t significantly specialized (the roles within plus or minus one standard deviation of the mean), judged by the range of problems to be solved on the job.

I’m talking about large-scale hiring in, for instance, customer service, early career sales, retail, warehousing, even entry-level software development, where data is plentiful, efficiency is a key goal, and humans are susceptible to injecting bias into the hiring process. I’m also bullish about an #AIrecruiting system that cultivates digital crumbs and creates “probabilities of behavior” that human recruiters can use at some point in the process. We’re getting there tech-wise, but we have a long way to go before we move beyond black and white stones.
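The 68% figure comes from the normal distribution: about 68.27% of values fall within one standard deviation of the mean. Treating role specialization as normally distributed is the framing behind that number, an assumption rather than something measured here. The snippet below only reproduces the arithmetic, using Python’s standard `math.erf`; the function name `share_within_k_sd` is hypothetical.

```python
import math

def share_within_k_sd(k: float) -> float:
    """Fraction of a normal distribution lying within k standard deviations
    of the mean: P(|Z| <= k) = erf(k / sqrt(2))."""
    return math.erf(k / math.sqrt(2))

# Share of roles within plus or minus one standard deviation of "typical"
# specialization, ASSUMING specialization is normally distributed.
print(f"{share_within_k_sd(1.0):.4f}")  # 0.6827
```

The same function gives about 95% at two standard deviations, which is why the “not significantly specialized” bucket shrinks or grows quickly depending on where the cutoff is drawn.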

As promised, one thing Rob left out was a description of AlphaGo’s architecture (it’s a darn good read) and why its performance is more evolved than previous attempts at building artificial intelligence solutions for games:

“…it combines a state-of-the-art tree search with two deep neural networks, each of which contains many layers with millions of neuron-like connections. One neural network, the “policy network”, predicts the next move, and is used to narrow the search to consider only the moves most likely to lead to a win. The other neural network, the “value network”, is then used to reduce the depth of the search tree — estimating the winner in each position in place of searching all the way to the end of the game.”

See what AlphaGo is doing with the parallel networks? It’s assessing hunches, feelings, what many recruiters call ESP. With experience, our hunches, fueled by many different scenarios and outcomes from the past, raise the probability of success, and we learn that some hunches don’t produce the desired outcome. Same with AlphaGo, so long as there’s enough computing power to drive the parallel nature of the algorithm.
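To make the two-network idea in the quote concrete, here is a toy sketch in Python. It is not DeepMind’s code, and it rests on several assumptions: a simple stone-taking game (take 1 to 3 stones, whoever takes the last stone wins) stands in for Go; `policy_net` and `value_net` are hypothetical hand-written heuristics standing in for trained neural networks; and the search is a plain depth-limited negamax, not the Monte Carlo tree search AlphaGo actually uses. What it does show is the division of labor: the policy prior narrows the search to the `top_k` most promising moves, and the value estimate stops the search at a fixed depth instead of playing every line out to the end.

```python
from typing import Dict, List

# ASSUMPTION: a toy game standing in for Go. Players alternately remove
# 1 to 3 stones from a pile; whoever takes the last stone wins.

def legal_moves(pile: int) -> List[int]:
    return [m for m in (1, 2, 3) if m <= pile]

def policy_net(pile: int) -> Dict[int, float]:
    """Hypothetical stand-in for a policy network: a prior over legal moves.
    A hand-written heuristic that favors leaving a multiple of 4."""
    scores = {m: (3.0 if (pile - m) % 4 == 0 else 1.0) for m in legal_moves(pile)}
    total = sum(scores.values())
    return {m: s / total for m, s in scores.items()}

def value_net(pile: int) -> float:
    """Hypothetical stand-in for a value network: estimated outcome for the
    player to move, in [-1, 1], without playing the game to the end."""
    return -1.0 if pile % 4 == 0 else 1.0

def top_moves(pile: int, top_k: int) -> List[int]:
    # Breadth reduction: keep only the moves the policy rates highest.
    priors = policy_net(pile)
    return sorted(priors, key=priors.get, reverse=True)[:top_k]

def search(pile: int, depth: int, top_k: int = 2) -> float:
    """Negamax score for the player to move."""
    if pile == 0:
        return -1.0  # the previous player took the last stone; player to move lost
    if depth == 0:
        return value_net(pile)  # depth reduction: estimate instead of searching on
    return max(-search(pile - m, depth - 1, top_k) for m in top_moves(pile, top_k))

def best_move(pile: int, depth: int = 3, top_k: int = 2) -> int:
    return max(top_moves(pile, top_k),
               key=lambda m: -search(pile - m, depth - 1, top_k))

print(best_move(10))
```

For example, `best_move(10)` returns 2, leaving the opponent a pile of 8 (a multiple of 4), while examining only two candidate moves per position and cutting the search off three moves deep.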

“Of course, all of this requires a huge amount of compute power, so we made extensive use of Google Cloud Platform, which enables researchers working on AI and Machine Learning to access elastic compute, storage and networking capacity on demand. In addition, new open source libraries for numerical computation using data flow graphs, such as TensorFlow, allow researchers to efficiently deploy the computation needed for deep learning algorithms across multiple CPUs or GPUs”

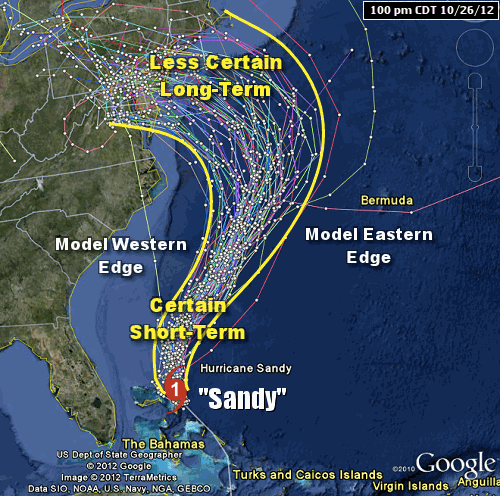

Let me put this another way: remember the meteorologists’ post-mortem analysis of their attempts to predict the path of Hurricane Sandy? The picture above details all the models of possible paths, based on a tremendous amount of data collected over decades. These models were created using a variety of simulations running on some of the most brutish computers on the planet. Yet we all remember what happened, and at what cost. Simulations and predictions are just that.

Each “Hurricane Sandy” adds more data and new sets of rules (learning) that enrich the model and change the hunches. Yet we all know that even the best model can’t prevent catastrophic damage. Sometimes recruiting and hiring the right person truly is a confluence of hunches and, to an experienced recruiter, almost a leap of faith. This #AIrecruiting stuff sure isn’t easy.

In “7 Trends for artificial intelligence in 2016: ‘Like 2015 on steroids’,” Andrew Moore, Dean of Carnegie Mellon’s School of Computer Science, notes:

“One thing I’m seeing among my own faculty is the realization that we, technologists, computer scientists, engineers who are building AI, have to appeal to someone else to create these programs. When coming up with a driverless car, for example, how does the car decide what to do when an animal comes into the road? When you write the code there’s the question: How much is an animal’s life worth next to a human’s life? Is one human life worth the lives of a billion domestic cats? A million? A thousand? I would hate to be the person writing that code.”

Consider the #AIrecruiting software developer: if they are an avowed animal lover, do they play out a Death Race 2000 scenario in their head while coding? This is one of many issues with developing systems to replace or augment humans.

Open source, open stack, and APIs too often mask the fact that there are human beings on the other side of the application. Artificial intelligence, machine learning, intelligent systems, and automation so good that it will replace human beings are not by themselves the seeds of success; they are foods that, when consumed unchecked, widen the divide between people and technology. The carrot held in front of us, that we’ll have more time to do the things we love, isn’t necessarily reality. Like drugs, alcohol, and cigarettes, technology draws us in and doesn’t let go. Ask me how often I’m hiking on a lonesome trail, only to come across people glued to their smartphones.

More data does not necessarily translate into better decisions when the human brain is conditioned to trust the technology rather than itself.

Even perfect marriages end in divorce: what’s going to happen when all these perfect Bot-selected people begin working with other perfect Bot-selected people and one of them farts in a meeting? Or hits “Reply All” with an unfortunate joke? Or votes for Trump/Bernie/Clinton and posts it for the world to see? Or has sex with a co-worker on their desk, then breaks up with them the next week, leading to a barrage of social media stupidity? Will the “person” who pushed these Bot hires through be dinged for a bad hire? Or will the #AIrecruiting system be forced to take a timeout?

Back to the use of AI in recruiting: the question to ask is, what do we not do especially well right now? Let’s, as a profession, talk now about the rules that govern these tasks and keep tuning them until we reach consensus on best practices. Let’s decide where automation in recruiting makes sense for the people who are likely to be affected by it. Yes, let’s put more human back into recruiting.

The journey from predicting the movement of black and white stones to predicting the behavior of people, replete with an unending number of human variables, is a huge responsibility for our profession. Rather than get all worked up over a technology to replace people, think about how #AIrecruiting can refocus organizations on how important recruiting should be, not only to the solvency of companies but to the lives of the people we touch. It is the ethical thing for us to do.

Let’s end this tome with the closing lines of Aldous Huxley’s Brave New World, a fitting “be careful what you wish for” rejoinder to what happens when you implicitly trust technology:

“Slowly, very slowly, like two unhurried compass needles, the feet turned towards the right; north, north-east, east, south-east, south, south-south-west; then paused, and, after a few seconds, turned as unhurriedly back towards the left. South-south-west, south, south-east, east…”